In my project, I have a Docker Swarm cluster where Jenkins agents are launched dynamically to run all Jenkins jobs. Why not Kubernetes? Because maintaining a Kubernetes cluster just for CI jobs is not optimal; Swarm is much simpler to manage.

Recently, I set up a self-hosted GitLab instance, but I couldn’t find an official guide on running GitLab Runner in a Swarm cluster with autoscaling, meaning that the runner automatically spins up additional job containers on the Swarm nodes. I had to gather the information bit by bit. Here’s the method I ended up with.

The guide consists of three steps:

- Obtain the runner authentication token
- Distribute the runner configuration to the Swarm nodes
- Deploy the runners

## Obtaining the Runner Authentication Token

Runners used to be registered with a runner registration token, but that mechanism is now deprecated. GitLab now uses a runner authentication token to connect runners instead.

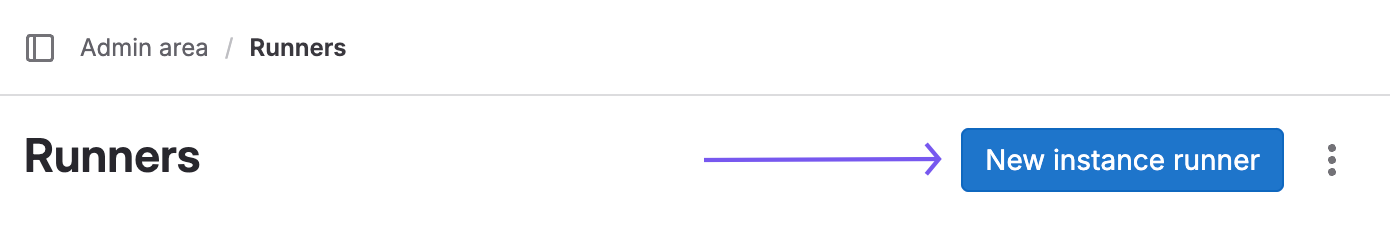

Go to the project, group, or instance settings to register a new Runner: Admin Settings -> CI/CD -> Runners -> New instance runner:

Specify the tags. Later, we’ll reference these tags in GitLab CI pipelines so that jobs run on these specific runners. I use the tag `swarm`.

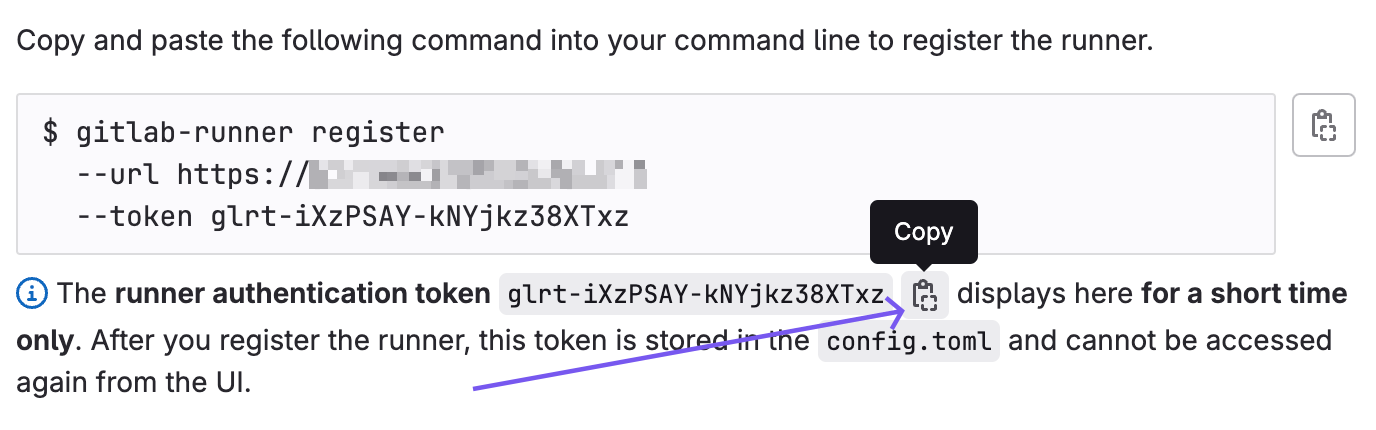

On the next page, copy the token and save it somewhere:

Now, GitLab will be waiting for a runner to register with this token.
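If you’d rather script this step than click through the UI, newer GitLab versions can also create a runner through the REST API (`POST /user/runners`), which returns the same kind of authentication token. This is a minimal sketch, not part of my setup: `create_instance_runner`, `GITLAB_HOST`, and `GITLAB_ADMIN_TOKEN` are hypothetical names, and it assumes an administrator’s personal access token with the `create_runner` scope. Check the API docs for your GitLab version before relying on it.

```shell
# Hypothetical helper: create an instance runner tagged "swarm" via the GitLab REST API.
# Assumes GITLAB_HOST (e.g. gitlab.example.com) and GITLAB_ADMIN_TOKEN
# (an admin personal access token with the create_runner scope) are set.
create_instance_runner() {
  curl --fail --silent --show-error \
    --request POST \
    --header "PRIVATE-TOKEN: ${GITLAB_ADMIN_TOKEN}" \
    --data "runner_type=instance_type" \
    --data "tag_list=swarm" \
    --data "description=docker-swarm-runner" \
    "https://${GITLAB_HOST}/api/v4/user/runners"
}
```

The JSON response includes a `token` field; with `jq` installed, `create_instance_runner | jq -r .token` prints just the token.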

## Configuring the Runners

One option is to launch a GitLab Runner container, exec into it, and run the `register` command. During registration, GitLab Runner saves the token and a minimal set of settings to `/etc/gitlab-runner/config.toml` inside the container. Instead of running `register` by hand in a container on every machine, though, let’s create the configuration file once and distribute it to all Swarm nodes.
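For comparison, the manual route looks roughly like this: a one-off, non-interactive `gitlab-runner register` run in a throwaway container that writes `config.toml` into a host directory. The `register_runner` helper and the `GITLAB_URL`/`RUNNER_TOKEN` variables are hypothetical; the flags are standard `register` options.

```shell
# Hypothetical helper: register once, non-interactively, writing config.toml
# into /etc/gitlab-runner/config on the host (the directory the stack mounts later).
# Assumes GITLAB_URL (e.g. https://gitlab.example.com/) and RUNNER_TOKEN (glrt-...) are set.
register_runner() {
  docker run --rm \
    -v /etc/gitlab-runner/config:/etc/gitlab-runner \
    gitlab/gitlab-runner:latest register \
    --non-interactive \
    --url "${GITLAB_URL}" \
    --token "${RUNNER_TOKEN}" \
    --executor docker \
    --docker-image alpine:latest
}
```

You would have to repeat this on every node, which is exactly what a shared configuration file avoids.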

Prepare the configuration file:

```toml
concurrent = 5 # Max jobs this runner process runs at once (one process per node)
check_interval = 0

[[runners]]
  name = "docker-swarm-runner"
  url = "https://<YOUR-GITLAB-INSTANCE>/"
  token = "<GITLAB AUTHENTICATION TOKEN>"
  executor = "docker"
  [runners.custom_build_dir]
  [runners.docker]
    tls_verify = false
    image = "alpine:latest" # Default image for jobs
    privileged = true # Only needed for Docker-in-Docker
    disable_entrypoint_overwrite = false
    oom_kill_disable = false
    disable_cache = false
    volumes = ["/cache"]
    shm_size = 0
    allowed_pull_policies = ["always", "if-not-present", "never"]
  [runners.cache]
    [runners.cache.s3]
    [runners.cache.gcs]
```

Replace the token with the one you obtained in the previous step. Also, specify the address of your GitLab instance.
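If you fill in the placeholders with a script rather than by hand, it’s worth failing loudly when one is left over. A minimal sketch using `sed`; the `fill_config` helper is hypothetical, and it assumes neither value contains `|` or `&`, which `sed` would treat specially.

```shell
# Hypothetical helper: substitute the two placeholders in config.toml and
# return non-zero if any <PLACEHOLDER> is still left in the file.
# Usage: fill_config FILE GITLAB_HOST RUNNER_TOKEN
fill_config() {
  local file="$1" host="$2" token="$3" tmp
  tmp="$(mktemp)" || return 1
  # "|" is the sed delimiter because hosts and tokens should not contain it
  sed -e "s|<YOUR-GITLAB-INSTANCE>|${host}|" \
      -e "s|<GITLAB AUTHENTICATION TOKEN>|${token}|" \
      "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # mktemp creates the file with mode 0600, which suits a file holding a token
  mv "$tmp" "$file"
  if grep -q '<[[:upper:] -]*>' "$file"; then
    echo "unreplaced placeholder left in ${file}" >&2
    return 1
  fi
}
```

For example, `fill_config config.toml gitlab.example.com glrt-xxxxxxxx`, with your own host and token.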

Next, on each Swarm node, create a directory for the configuration (I use `/etc/gitlab-runner/config`) and place this file there. How you do this is up to you, but I use Ansible.

## Copying the Configuration File to Nodes

Here’s an example of Ansible code to copy the configuration file:

templates/config.toml.j2:

```toml
concurrent = 5
check_interval = 0

[[runners]]
  name = "docker-swarm-runner"
  url = "{{ gitlab_instance_url }}"
  token = "{{ gitlab_runner_swarm_auth_token.value }}"
  executor = "docker"
  [runners.custom_build_dir]
  [runners.docker]
    tls_verify = false
    image = "alpine:latest" # Default image for jobs, can be overridden in .gitlab-ci.yml
    privileged = true # Enable if you need to run Docker-in-Docker or privileged containers
    disable_entrypoint_overwrite = false
    oom_kill_disable = false
    disable_cache = false
    volumes = ["/cache"]
    shm_size = 0
    allowed_pull_policies = ["always", "if-not-present", "never"]
  [runners.cache]
    [runners.cache.s3]
    [runners.cache.gcs]
```

tasks/main.yml:

```yaml
- name: Create directories
  ansible.builtin.file:
    path: /etc/gitlab-runner/config
    state: directory
    recurse: true

- name: Copy config file
  ansible.builtin.template:
    src: config.toml.j2
    dest: /etc/gitlab-runner/config/config.toml
```

The configuration file should be on all Swarm cluster nodes.
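Without Ansible, a plain shell loop run from a manager node does the same job. This sketch assumes passwordless SSH to every node by hostname, as a user allowed to write to `/etc/gitlab-runner`; `push_config` is a hypothetical helper.

```shell
# Hypothetical helper: copy a finished config.toml to every Swarm node.
# Run it on a manager node, because "docker node ls" only works there.
push_config() {
  local src="$1" node
  for node in $(docker node ls --format '{{.Hostname}}'); do
    ssh "$node" 'mkdir -p /etc/gitlab-runner/config' || return 1
    scp "$src" "${node}:/etc/gitlab-runner/config/config.toml" || return 1
  done
}
```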

## Deploying GitLab Runner in Swarm

Swarm stacks use the familiar docker-compose file format, so let’s create one. I copy it with Ansible as well, so here’s the Jinja template:

```yaml
services:
  gitlab-runner:
    image: gitlab/gitlab-runner:{{ gitlab_runner_image_version }}
    deploy:
      mode: global
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /etc/gitlab-runner/config:/etc/gitlab-runner
    networks:
      - gitlab-net

networks:
  gitlab-net:
    external: false
```

- We set `mode: global`, so one runner container runs on every cluster node. This is similar to a DaemonSet in Kubernetes.

- We mount the host directory containing `config.toml` (`/etc/gitlab-runner/config`) at `/etc/gitlab-runner` inside the container.

- We also mount the Docker socket so that GitLab Runner can control the node’s Docker engine and start new containers for jobs on that node (this is what makes the autoscaling work).

- We pin the GitLab Runner image version; the available tags are listed on Docker Hub.

I copy this file using Ansible:

```yaml
- name: Copy compose file
  ansible.builtin.template:
    src: docker-compose.yml.j2
    dest: /etc/gitlab-runner/docker-compose.yml
```

The stack has to be deployed from a manager node, but this task puts the file on every node. That’s redundant, but it’s easier for me this way because I don’t need any conditions in Ansible.

On a manager node, run:

```shell
docker stack deploy -c /etc/gitlab-runner/docker-compose.yml gitlab-runner
```
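`docker stack deploy` refuses to run on worker nodes, so if you script this step it helps to check the node’s role first. A minimal sketch, assuming the compose file lives at `/etc/gitlab-runner/docker-compose.yml` as in the Ansible task; `deploy_runner_stack` is a hypothetical helper.

```shell
# Hypothetical helper: deploy the runner stack, but only from a Swarm manager.
# "docker info" reports Swarm.ControlAvailable=true on manager nodes.
deploy_runner_stack() {
  local compose="${1:-/etc/gitlab-runner/docker-compose.yml}"
  if [ "$(docker info --format '{{.Swarm.ControlAvailable}}')" != "true" ]; then
    echo "this node is not a Swarm manager; run the deploy on a manager" >&2
    return 1
  fi
  docker stack deploy -c "$compose" gitlab-runner
}
```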

After a minute, check:

```
root@swarm-node01:/home/ysemyenkov# docker stack ps gitlab-runner
ID             NAME                        IMAGE                                 NODE           DESIRED STATE   CURRENT STATE         ERROR     PORTS
pnkxq7yf3wm3   gitlab-runner.1tlong-name   gitlab/gitlab-runner:ubuntu-v17.3.0   swarm-node01   Running         Running 2 days ago
ih4822qch9ew   gitlab-runner.3tlong-name   gitlab/gitlab-runner:ubuntu-v17.3.0   swarm-node03   Running         Running 2 days ago
xzj5yt1cv5ae   gitlab-runner.2tlong-name   gitlab/gitlab-runner:ubuntu-v17.3.0   swarm-node02   Running         Running 2 days ago
```
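For a scripted version of this check, `docker service ls` shows each service’s mode and a running/desired task count. Stack services are named `<stack>_<service>`, so here the service is `gitlab-runner_gitlab-runner`. The helper below is a hypothetical sketch that passes only if the service is global and every desired task is running.

```shell
# Hypothetical helper: check that the runner service is global and fully running.
check_runner_service() {
  local service="${1:-gitlab-runner_gitlab-runner}" line mode replicas
  # The name filter can match several services, so keep the exact name only
  line=$(docker service ls --filter "name=${service}" \
    --format '{{.Name}} {{.Mode}} {{.Replicas}}' | awk -v s="$service" '$1 == s')
  if [ -z "$line" ]; then
    echo "service ${service} not found" >&2
    return 1
  fi
  mode=$(printf '%s\n' "$line" | awk '{print $2}')
  replicas=$(printf '%s\n' "$line" | awk '{print $3}')
  if [ "$mode" != "global" ]; then
    echo "${service} runs in ${mode} mode, expected global" >&2
    return 1
  fi
  # REPLICAS is "running/desired"; the two numbers match once every node is up
  if [ "${replicas%%/*}" != "${replicas#*/}" ]; then
    echo "${service}: only ${replicas} tasks running" >&2
    return 1
  fi
  echo "${service}: ${mode}, ${replicas}"
}
```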

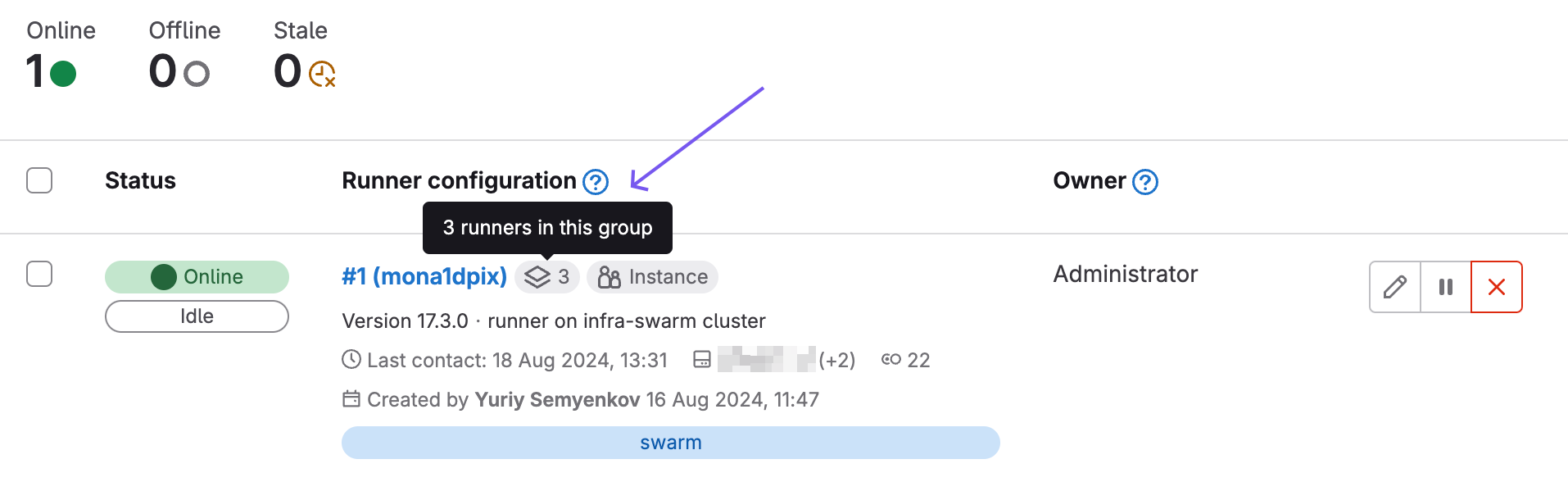

If everything is okay, you’ll see the registered Runner in GitLab:
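You can also ask the runner itself whether it can reach GitLab with its token. Swarm labels task containers with `com.docker.swarm.service.name`, so the runner container on a node can be found by that label. The helper is a hypothetical sketch and only checks the node it runs on.

```shell
# Hypothetical helper: run "gitlab-runner verify" inside this node's runner container.
verify_local_runner() {
  local service="${1:-gitlab-runner_gitlab-runner}" cid
  cid=$(docker ps -q --filter "label=com.docker.swarm.service.name=${service}" | head -n 1)
  if [ -z "$cid" ]; then
    echo "no ${service} container on this node" >&2
    return 1
  fi
  docker exec "$cid" gitlab-runner verify
}
```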

## Testing

We can create a test pipeline that runs ten jobs simultaneously:

```yaml
stages:
  - test

parallel-jobs:
  stage: test
  tags:
    - swarm
  script:
    - echo "Starting sleep for 600 seconds"
    - sleep 600
  parallel:
    matrix:
      - JOB_NAME: "job1"
      - JOB_NAME: "job2"
      - JOB_NAME: "job3"
      - JOB_NAME: "job4"
      - JOB_NAME: "job5"
      - JOB_NAME: "job6"
      - JOB_NAME: "job7"
      - JOB_NAME: "job8"
      - JOB_NAME: "job9"
      - JOB_NAME: "job10"
```

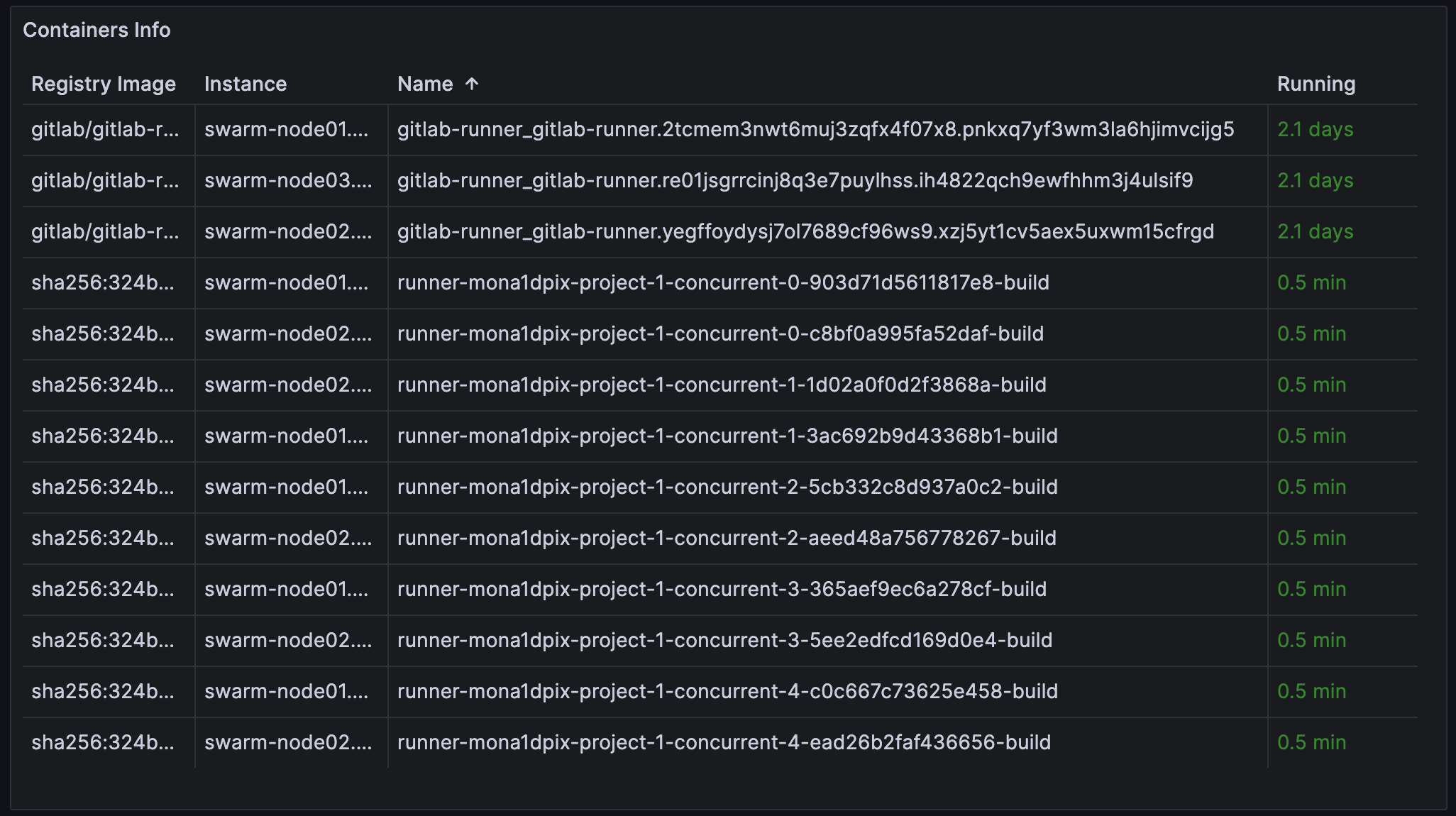

Run the pipeline and see that the containers launched in the required number and on different nodes. You can view it with docker ps command on the nodes or through monitoring tools. For example, I prefer viewing cAdvisor metrics in Grafana:

You can see that the top three containers are the main GitLab Runner processes (one per node), which pick up jobs and create a container for each of them. Below them are our sleep job containers, running simultaneously on different nodes.
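If you’d rather count job containers from the shell than in Grafana, a loop over the nodes works. This sketch assumes SSH access to each node by hostname, and it relies on the Docker executor naming job containers with a `runner-` prefix; that naming is an assumption worth confirming with `docker ps` on one node first, and short-lived helper containers may be counted too. `count_job_containers` is a hypothetical helper.

```shell
# Hypothetical helper: print "<node> <number of job containers>" for every Swarm node.
# Run on a manager node. Assumption: job containers are named "runner-...".
count_job_containers() {
  local node n
  for node in $(docker node ls --format '{{.Hostname}}'); do
    # grep -c prints 0 but exits 1 when nothing matches, hence "|| true"
    n=$(ssh "$node" "docker ps --format '{{.Names}}'" | grep -c '^runner-' || true)
    echo "${node} ${n}"
  done
}
```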

That’s it.